DBMS, January 1998

One of the most widely debated issues in data warehousing is how to go about planning the warehouse construction. Do we build the whole data warehouse all at once from a central, planned perspective (the monolithic approach) or do we opportunistically build separate subject areas whenever we feel like it (the stovepipe approach)? In this article I set out to demolish two powerful myths. First, nobody believes in a totally monolithic approach, and yet nobody defends a totally stovepipe approach either. All the leading data warehouse practitioners use some kind of architectural step-by-step approach to build an enterprise data warehouse. This article describes a specific variation of that step-by-step approach called "supermarts." A supermart is a data mart that has been carefully built with a disciplined architectural framework.

The second myth I want to demolish is that a data mart must be restricted to a highly aggregated subset of a nonqueryable data warehouse. We have passed out of the phase in data warehouse development where that restriction applied. This view of data marts is the source of many problems and misunderstandings. You will see that a supermart is naturally a complete subset of the overall data warehouse and that every useful supermart must be based on the most granular (atomic) data that can possibly be collected and stored.

The Planning Crisis

The task of planning an enterprise data warehouse is daunting. The newly appointed manager of the data warehousing effort in a large enterprise is faced with two huge and seemingly unrelated challenges. On the one hand, the manager is supposed to understand the content and location of the most complicated asset owned by the enterprise: the legacy data. Somehow (usually overnight) the new data warehouse manager is supposed to become an authority on exactly what is contained in all those VSAM, ISAM, IMS, DB2, and Oracle tables. Every field in every table must be understood. The data warehouse manager must be able to retrieve any such element of data and, if necessary, clean it up and correct it. If all this weren't enough, the data warehouse manager is also supposed to understand exactly what keeps management awake at night. The data warehouse is expected to contain the exact data needed to answer everyone's burning questions. Of course, the data warehouse manager is "free" to drop in on senior management at any time to discuss current corporate priorities. Just make sure you get this data warehouse done pretty soon.

The pressure of this daunting task has built up to the point where it has a name: data mart. Regardless of specific definitions, the phrase "data mart" means avoiding the impossibility of tackling the enterprise data warehouse-planning job all at once. Data warehouse planners take refuge in carving off a little piece of the whole data warehouse, bringing it to completion, and calling it a data mart.

Unfortunately, in many cases building separate data marts rather than a single data warehouse has become an excuse for ignoring any kind of design framework that might tie the data marts together. Vendors' marketing claims for a "data mart in a box" and a "15-minute data mart" are pandering to the market's need for a simple solution, but these claims are a real disservice to the data warehousing manager who must make these data marts fit together into a coherent whole.

Isolated stovepipe data marts that cannot be tied together usefully are the bane of the data warehouse movement. They are much worse than a simple lost opportunity for analysis. Stovepipe data marts perpetuate incompatible views of the enterprise. Stovepipe data marts enshrine the reports that cannot be compared with each other. And stovepipe data marts become legacy implementations in their own right, where, by their very existence, they block the development of an integrated enterprise data warehouse. Some people have begun calling these legacy data marts "lega marts." So if building the data warehouse all at once is too daunting, and building it as isolated pieces defeats the overall goal, what is to be done?

Data Marts with an Architecture

The answer to this dilemma is to start the data warehouse planning process with a short overall architecture phase that has finite and specific goals. Next, follow this architecture phase with a step-by-step implementation of separate data marts where each implementation step closely adheres to the architecture. In this way the data warehouse manager gets the best of both worlds. The architecture phase produces specific guidelines that the separate data mart development teams can follow, and the data mart development teams can work fairly independently and asynchronously. As the separate data marts come online, they will fit together like the pieces of a puzzle. We call these coordinated data marts supermarts. At some point, enough supermarts exist to make good on the promise of an integrated enterprise data warehouse.

To succeed in building an enterprise data warehouse you must inevitably perform the following two steps: First, create a surrounding architecture that defines the scope and implementation of the complete data warehouse, and second, oversee the construction of each piece of the complete data warehouse. Now stop and consider the second step. The biggest task in constructing a data warehouse is designing the extract system. This is the mechanism that gets the data from a specific legacy system and moves it into the data staging area, where it can be transformed into the various load record images needed by the final database that presents the data for querying. Since the implementation of the extract logic is largely specific to each original data source, it really doesn't matter whether you think of the task as a whole or break it into pieces. Either way, you have to put your pants on one leg at a time. You will in effect be implementing your data marts one at a time no matter how you plan your project.

Conformed Dimensions and Standard Fact Definitions

In the architecture phase that precedes the implementation of any data mart, the goals are to produce a master suite of conformed dimensions and standardize the definitions of facts. I am assuming you have a proper dimensional design for all the data marts. Any given data mart is assumed to consist of a set of fact tables, each with a multipart key made up of dimension key components (foreign keys joining to the dimension tables). The fact tables all contain zero or more facts that represent measurements taken at each combination of the dimension key components. Every fact table is surrounded by a "halo" of dimension tables, where the dimension keys are primary keys in each respective dimension table. This design is also called a star join. If you are not familiar with dimensional modeling, there are many references on this subject. See, for example, my article "A Dimensional Modeling Manifesto," DBMS, August 1997.
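As a minimal sketch of the star join just described, the following uses Python's built-in sqlite3 module. The table names, column names, and sample rows are illustrative assumptions, not part of any particular data mart.

```python
import sqlite3

# Hypothetical star join: one fact table surrounded by two dimension tables.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE product_dim  (product_key INTEGER PRIMARY KEY, product_name TEXT, category TEXT);
CREATE TABLE calendar_dim (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);
-- The fact table's multipart key is made of foreign keys to the dimensions.
CREATE TABLE sales_fact (
    date_key    INTEGER REFERENCES calendar_dim(date_key),
    product_key INTEGER REFERENCES product_dim(product_key),
    dollars     REAL,
    PRIMARY KEY (date_key, product_key)
);
INSERT INTO product_dim  VALUES (1, 'Widget', 'Hardware'), (2, 'Gadget', 'Hardware');
INSERT INTO calendar_dim VALUES (10, '1998-01-05', '1998-01'), (11, '1998-02-02', '1998-02');
INSERT INTO sales_fact   VALUES (10, 1, 100.0), (10, 2, 50.0), (11, 1, 25.0);
""")

# Constrain and group on dimension attributes, then sum the facts.
rows = conn.execute("""
    SELECT c.month, p.category, SUM(f.dollars)
    FROM sales_fact f
    JOIN calendar_dim c ON c.date_key = f.date_key
    JOIN product_dim  p ON p.product_key = f.product_key
    GROUP BY c.month, p.category
    ORDER BY c.month
""").fetchall()
```

The query touches the fact table only through its dimension keys; every label in the result comes from a dimension table.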

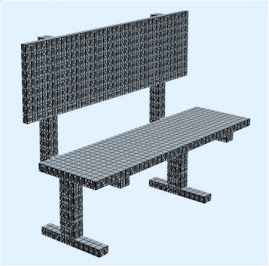

A conformed dimension is a dimension that means the same thing with every possible fact table to which it can be joined. Generally this means that a conformed dimension is identical in each data mart. Examples of obvious conformed dimensions include Customer, Product, Location, Deal (Promotion), and Calendar (Time). (See Figure 1.) A major responsibility of the central data warehouse design team is to establish, publish, maintain, and enforce the conformed dimensions.

Establishing a conformed dimension is a very significant step. A conformed Customer dimension is a master table of customers with a clean customer key and many well-maintained attributes describing each customer. It is likely that the conformed Customer dimension is an amalgamation and distillation of data from several legacy systems and possibly outside sources. The address fields in the Customer dimension, for instance, should constitute the best mailable address that is known for each customer anywhere within the enterprise. It is often the responsibility of the central data warehouse team to create the conformed Customer dimension and provide it as a resource to the rest of the enterprise, both for legacy and data warehouse use. I described this special role for the central data warehouse team in more detail in my Data Warehouse Architect column in DBMS, June 1997.

The conformed Product dimension is the enterprise's agreed-upon master list of products, including all product attributes and all product rollups such as category and department. A good Product dimension, like a good Customer dimension, should have at least 50 separate textual attributes.

Ideally, the conformed Location dimension should be based on specific points on the map, like specific street addresses or even precise latitudes and longitudes. Specific points in space roll up to every conceivable geographic hierarchy, including city-county-state-country, as well as ZIP codes and idiosyncratic sales territories and sales regions.

The conformed Calendar dimension will almost always be a table of individual days, spanning a decade or more. Each day will have many useful attributes drawn from the legal calendars of the various states and countries the enterprise deals with, as well as special fiscal calendar periods and marketing seasons relevant only to internal managers.

Conformed dimensions are enormously important to the data warehouse. Without a strict adherence to conformed dimensions, the data warehouse cannot function as an integrated whole. If a dimension like Customer or Product is used in a nonconformed way, then either the separate data marts cannot be used together or, worse yet, attempts to use them together will produce incorrect results. To state this more positively, conformed dimensions make it possible to use a single dimension table against multiple fact tables in the same database space, to present consistent user interfaces and data content whenever the dimension is used, and to give attributes, and therefore rollups, a consistent interpretation across data marts.

Designing Conformed Dimensions

The task of identifying and designing the conformed dimensions should take a few weeks. Most conformed dimensions will naturally be defined at the most granular level possible. The grain of the Customer dimension will be the individual customer. The grain of the Product dimension will be the lowest level at which products are tracked in the source systems. The grain of the Time dimension will usually be the individual day. Systems that also track a specific Time-of-Day should probably make Time-of-Day a separate dimension.

Conformed dimensions should almost always have an anonymous data warehouse key that is not a production system key from one of the legacy systems. There are many reasons for the data warehouse keys to be independent from production. The administrative goals of the production systems are not the same as those of the data warehouse. Sooner or later, the production system will step on the data warehouse, either by reusing the same key or by changing the administrative assumptions in some way. Also, the data warehouse has to produce generalized keys for various situations, including the problem of slowly changing dimensions. For a discussion of slowly changing dimensions, see my April 1996 DBMS column.
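One way to picture an anonymous warehouse key is a lookup that hands out meaningless integers. The sketch below is an assumption-laden illustration: the class `SurrogateKeyMap`, its method names, and the source system names are all hypothetical.

```python
from itertools import count


class SurrogateKeyMap:
    """Assigns anonymous integer warehouse keys, independent of production keys."""

    def __init__(self):
        self._next = count(1)
        self._by_source = {}  # (source_system, production_key) -> warehouse key

    def lookup_or_assign(self, source_system, production_key):
        natural = (source_system, production_key)
        if natural not in self._by_source:
            self._by_source[natural] = next(self._next)
        return self._by_source[natural]

    def new_version(self, source_system, production_key):
        """Issue a fresh key for a changed description of the same entity,
        which is one way of handling a slowly changing dimension."""
        self._by_source[(source_system, production_key)] = next(self._next)
        return self._by_source[(source_system, production_key)]


keys = SurrogateKeyMap()
k1 = keys.lookup_or_assign("orders", "C-1001")
k2 = keys.lookup_or_assign("billing", "C-1001")  # same production key, other system
k3 = keys.lookup_or_assign("orders", "C-1001")   # repeat lookup is stable
k4 = keys.new_version("orders", "C-1001")        # customer description changed
```

Because the warehouse key carries no production meaning, a reused or reformatted production key changes only the lookup table, not the fact tables.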

The Data Staging Area

A good conformed dimension is often a combination of attributes from multiple sources. In the data staging area of the data warehouse, the contributions from these multiple sources are merged under the overall data warehouse key for each record, such as Customer. The main activities of the data staging area are sorting, cleaning, looking up, checking relationships, and merging. An interesting and provocative view is that these data staging activities are not a very good match for relational databases. Data staging is more about processing sequential files in a single pass under program control. In a future article, I will propose some technologies and architectures for data staging that avoid the investment and overhead of a full-blown relational DBMS for this step of the extract pipeline.
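The merge step can be sketched as a single sequential pass over pre-sorted extracts. This is only an illustration under stated assumptions: the two source lists, the record layout, and the function `merge_sources` are hypothetical, and the inputs are assumed to be sorted by warehouse key already.

```python
from heapq import merge
from itertools import groupby
from operator import itemgetter

# Hypothetical extracts, each already sorted by the warehouse customer key.
# Each record is (customer_key, attribute_name, value).
billing = [(1, "address", "12 Elm St"), (2, "address", "9 Oak Ave")]
crm = [(1, "segment", "Retail"), (3, "segment", "Wholesale")]


def merge_sources(*sorted_sources):
    """Single sequential pass: merge sorted inputs and fold each key's
    attributes into one dimension record."""
    merged = merge(*sorted_sources, key=itemgetter(0))
    for customer_key, group in groupby(merged, key=itemgetter(0)):
        record = {"customer_key": customer_key}
        for _, name, value in group:
            record[name] = value
        yield record


customer_dim = list(merge_sources(billing, crm))
```

Nothing here needs random access or a join engine, which is the sense in which staging resembles sequential file processing.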

Taking the Pledge

If the central data warehouse team succeeds in defining and providing a set of master conformed dimensions for the enterprise, it is extremely important for the separate data mart teams actually to use these dimensions. The commitment to use the conformed dimensions is much more than a technical decision. It is a business-policy decision that is key to making the enterprise data warehouse function. The use of the conformed dimensions should be supported at the highest executive levels. This issue should be a sound bite for the enterprise CIO.

Using data effectively in a large enterprise is intimately connected to how the enterprise is organized and how it communicates internally. The data warehouse is the vehicle for delivering the data to all the affected parties. Changing the way an enterprise is organized, how it communicates, and how it uses its data assets is mainline business reengineering. The CIO should make all the separate data mart teams "take the pledge" always to use the conformed dimensions.

Permissible Variations of Conformed Dimensions

It is possible to create a subset of a conformed dimension table for certain data marts if you know that the domain of the associated fact table only contains that subset. For example, the master Product table can be restricted to just those products manufactured at a particular location if the data mart in question pertains only to that location. We could call this a simple data subset, because the reduced dimension table preserves all the attributes of the original dimension and exists at the original granularity.

A rollup data subset systematically removes both rows and columns from the original dimension table. For example, it is common to restrict the Calendar table from days down to months. In this case we may only keep the record describing the first day of each month, but we must also remove all those attributes like Day-of-Week and Holiday-Flag that only make sense at a daily grain.
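The two kinds of subset can be sketched side by side. For brevity this example applies both to a small Calendar table rather than to Product; the attribute names and the two-month date range are assumptions made for illustration.

```python
from datetime import date, timedelta

# Hypothetical conformed Calendar dimension at a daily grain (Jan-Feb 1998).
calendar_dim = []
day = date(1998, 1, 1)
while day < date(1998, 3, 1):
    calendar_dim.append({
        "date": day,
        "month": day.strftime("%Y-%m"),
        "year": day.year,
        "day_of_week": day.isoweekday(),  # 1 = Monday; only meaningful per day
    })
    day += timedelta(days=1)


def simple_subset(rows, predicate):
    """Fewer rows, same columns, same grain."""
    return [row for row in rows if predicate(row)]


def rollup_to_month(rows):
    """Keep the first day of each month and drop day-level attributes."""
    monthly = []
    for row in rows:
        if row["date"].day == 1:
            monthly.append({"month": row["month"], "year": row["year"]})
    return monthly


january_only = simple_subset(calendar_dim, lambda r: r["month"] == "1998-01")
month_dim = rollup_to_month(calendar_dim)
```

The simple subset keeps every attribute; the rollup subset keeps only those that still make sense at the coarser grain.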

Perhaps you are wondering how to create queries in an environment where the conformed dimensions can be subsetted. Which dimension table should be used where? Actually, it is much simpler than it sounds. Each dimension table is paired with its companion fact table in a particular data mart. Any application that drills across data marts must inevitably use multipass SQL to query each supermart separately and in sequence. It is usually the case that a separate SQL query is generated for each column in a drill-across report. The beauty of using conformed dimensions is that the report will run to completion if and only if the dimension attributes used in the report are found in each dimension table. Since the dimensions are conformed, the business answers are guaranteed to be consistent. The numbers will also be comparable if we have established standard fact definitions.
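A drill-across report built with multipass SQL might look like the following sketch. The fact tables, the helper `one_pass`, and the sample data are assumptions; for simplicity both fact tables share one physical copy of the Product dimension here, whereas separate supermarts would each hold their own conformed copy.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE product_dim (product_key INTEGER PRIMARY KEY, product_name TEXT);
CREATE TABLE shipments_fact (product_key INTEGER, units_shipped INTEGER);
CREATE TABLE sales_fact     (product_key INTEGER, units_sold INTEGER);
INSERT INTO product_dim    VALUES (1, 'Widget'), (2, 'Gadget');
INSERT INTO shipments_fact VALUES (1, 40), (1, 60), (2, 30);
INSERT INTO sales_fact     VALUES (1, 80), (2, 10), (2, 15);
""")


def one_pass(fact_table, fact_column):
    """One SQL pass against a single fact table, grouped by a conformed attribute.
    The table and column names are trusted constants, not user input."""
    sql = f"""
        SELECT p.product_name, SUM(f.{fact_column})
        FROM {fact_table} f JOIN product_dim p ON p.product_key = f.product_key
        GROUP BY p.product_name
    """
    return dict(conn.execute(sql).fetchall())


# Each report column comes from its own query; rows line up on the row header.
shipped = one_pass("shipments_fact", "units_shipped")
sold = one_pass("sales_fact", "units_sold")
report = {
    name: (shipped.get(name), sold.get(name))
    for name in sorted(set(shipped) | set(sold))
}
```

The final merge works only because `product_name` means the same thing in both passes, which is exactly what conforming the dimension guarantees.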

Establishing the Standard Fact Definitions

I have talked thus far about the central task of setting up conformed dimensions to tie supermarts together. This is 80 percent of the up-front architectural effort. The remaining 20 percent is establishing standard fact definitions.

Fortunately, the task of identifying the standard fact definitions is done at the same time as the identification of the conformed dimensions. You need standard fact definitions when you use the same terminology across data marts and when you build single reports that drill across the data marts as described in the previous section.

Examples of facts that must be standardized include Revenue, Profit, Standard Prices, and Standard Costs. The underlying equations that derive these measures must be the same if they are to be called the same thing. These standard fact definitions need to be defined in the same dimensional context and with the same units of measurement from data mart to data mart. Revenues and profits need to be reported in the same time periods and in the same geographies.

Sometimes a fact has a natural unit of measure in one fact table and another natural unit of measure in another fact table. For example, the flow of a product down a typical manufacturing value chain may best be measured in shipping cases at the manufacturer but should be measured in scanned units at the retailer. Even if all the dimensional considerations have been correctly taken into account, it would be difficult to use these two incompatible units of measure in one drill-across report. The usual solution to this is to refer the user to a conversion factor buried in the product dimension table and hope the user can find the conversion factor and use it correctly. This is unacceptable overhead in my opinion. The correct solution is to carry the fact in both shipping cases and in scanned units in the manufacturer's table or in a view of the manufacturer's table. That way a report can easily glide down the value chain, picking off comparable facts.
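Carrying the fact in both units through a view could be sketched as follows. The table names, the `units_per_case` attribute, and the sample values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE product_dim (product_key INTEGER PRIMARY KEY, units_per_case INTEGER);
CREATE TABLE mfr_shipments_fact (product_key INTEGER, cases_shipped INTEGER);
INSERT INTO product_dim VALUES (1, 24), (2, 12);
INSERT INTO mfr_shipments_fact VALUES (1, 10), (2, 5);
-- The view applies the conversion once so that report writers never have to.
CREATE VIEW mfr_shipments_v AS
    SELECT f.product_key,
           f.cases_shipped,
           f.cases_shipped * p.units_per_case AS units_shipped
    FROM mfr_shipments_fact f
    JOIN product_dim p ON p.product_key = f.product_key;
""")

rows = conn.execute(
    "SELECT product_key, cases_shipped, units_shipped "
    "FROM mfr_shipments_v ORDER BY product_key"
).fetchall()
```

A drill-across report can then compare `units_shipped` directly with the retailer's scanned units without the user ever touching the conversion factor.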

If it is difficult or impossible to standardize a fact exactly, then care must be taken to give the different interpretations different names. We must distinguish Month End Revenue from Billing Cycle Revenue. The most serious mistake is to call both of these facts Revenue.

The Importance of Granularity

The conformed dimensions will usually be granular because each record in these tables most naturally corresponds to a single description of a customer, a product, a promotion, or a day. This makes it quite easy to bring in the associated fact tables at the intersection of all these granular dimensions. In other words, the base-level fact tables in each data mart should be at the natural lowest levels of all the constituent dimensions.

There is tremendous power and resiliency in granular fact-table data. By expressing the bedrock data of the data mart at the lowest grain, the data mart becomes almost impervious to surprises or changes. Such a granular fact table can be gracefully extended by adding newly sourced facts, newly sourced dimension attributes, and whole dimensions. When I say "gracefully extended," I mean specifically that all old queries and applications continue to run after a graceful change has been made; no fact tables have to be dropped and reloaded, and no keys have to be changed. This notion of graceful extension is one of the strong characteristics of the dimensional modeling approach. I discussed this in detail in the August 1997 "Manifesto" article mentioned earlier.
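A small sqlite3 sketch can illustrate graceful extension. The table, the added columns, and the convention that promotion key 0 stands for "no promotion" are assumptions made for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales_fact (date_key INTEGER, product_key INTEGER, dollars REAL);
INSERT INTO sales_fact VALUES (10, 1, 100.0), (10, 2, 50.0);
""")

OLD_QUERY = (
    "SELECT product_key, SUM(dollars) FROM sales_fact "
    "GROUP BY product_key ORDER BY product_key"
)
before = conn.execute(OLD_QUERY).fetchall()

# Add a newly sourced fact and a new dimension key without dropping or reloading.
conn.execute("ALTER TABLE sales_fact ADD COLUMN units INTEGER")
conn.execute("ALTER TABLE sales_fact ADD COLUMN promotion_key INTEGER DEFAULT 0")
conn.execute("INSERT INTO sales_fact VALUES (11, 1, 25.0, 5, 7)")

# The old query runs unchanged against the extended table.
after = conn.execute(OLD_QUERY).fetchall()
```

Existing rows pick up the default promotion key, so the old query text needs no edits and no existing key is rewritten.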

When the fact tables are granular, they serve as the natural destination for current operational data that may be extracted frequently from the operational systems. The current rolling snapshot of an operational system finds a happy home in the granular fact table defined at the Account by Day by Transaction level. In this issue, my regular column (See Data Warehouse Architect) describes two companion tables, the Transaction and Snapshot fact tables, that should be the bedrock foundation of many data marts.

A new and growing justification for extremely granular data is the desire to do data mining and to understand customer behavior. Data mining is generally much less effective on aggregated data. Well, suppose we agree with all this but we don't have enough room on our data mart server to store the big granular fact tables. Does this invalidate the whole approach of this article?

No! This dilemma is simply a matter of terminology. Let us broaden the definition of data mart (or supermart) to include all the fact tables and dimension tables associated with a business process, regardless of where they are physically stored. We are moving so rapidly into a network-oriented view of our data warehouses that tying the definition of a data mart to a specific hardware box is unnecessarily restricting. The queries against our data marts are increasingly being handled by a navigator layer that sorts out the physical location of the data based on the details of the user's request. If the user calls for relatively aggregated data, perhaps that data is on a local server. But if the user calls for more detailed data, we switch to a larger centralized machine at another location. There are many reasons for encouraging this kind of indirection at the physical storage level, because it gives the back-room DBAs much more flexibility to mix and match hardware without worrying about tweaking the users' applications.
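The routing decision made by a navigator layer can be sketched in a few lines. The catalog structure, the server labels, and the "smallest table that covers the request" rule are assumptions chosen for illustration, not a description of any particular product.

```python
# Hypothetical catalog: each entry names a physical table, where it lives,
# and the dimension attributes (grain) it can answer for.
CATALOG = [
    {"table": "sales_by_month_category", "server": "local",
     "attributes": {"month", "category"}, "rows": 1_000},
    {"table": "sales_fact", "server": "central",
     "attributes": {"day", "month", "product", "category", "customer"},
     "rows": 50_000_000},
]


def navigate(requested_attributes):
    """Pick the smallest table whose grain covers every requested attribute."""
    candidates = [t for t in CATALOG if set(requested_attributes) <= t["attributes"]]
    if not candidates:
        raise LookupError(f"no table can answer {sorted(requested_attributes)}")
    return min(candidates, key=lambda t: t["rows"])


summary_target = navigate({"month", "category"})
detail_target = navigate({"day", "customer"})
```

The user's application names only attributes; where the chosen table lives is a detail the DBAs can change by editing the catalog.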

Higher-Level Data Marts

This article has mostly focused on first-level data marts that are recognizable images of legacy applications. In other words, if we have an Orders system, then we have an Orders data mart. If we have a Payments and Collections system, then we have a Payments and Collections data mart.

I recommend starting with these kinds of first-level data marts because I believe this minimizes the risk of signing up to an implementation that is too ambitious. Most of the risk comes from biting off too big an extract programming job. Also, in many cases an efficiently implemented first-level data mart will provide users with enough interesting data to keep them happy and quiet while the data mart teams keep working on harder issues.

After several first-level data marts have been implemented, it is reasonable to combine these data marts into a second-level data mart. The classic example of a second-level data mart is the Profitability data mart, where separate components of revenue and cost are combined to allow a complete view of profitability. Profitability data marts at a granular level are tremendously exciting because they can be rolled up through the Customer dimension to generate customer profitability. They can be rolled up through the Product dimension to generate product profitability. And they can be rolled up through the Promotion dimension to generate promotion profitability. The lesson here is to be disciplined and not try to bring up a complete Profitability data mart on the first try. Otherwise you will drown in extract programming as you struggle to source all the separate components of revenue and cost. If you are absolutely forced to bring up profitability in your first data mart then you may need to assign costs with simple rule-of-thumb allocations rather than by doing the complete job of sourcing all the underlying cost detail. Later, when you have time to source the cost detail correctly, you can bring up an Activity Based Profitability second-level data mart to supersede your first-level data mart.
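The rollups described above can be sketched with plain Python dictionaries. The customers, products, promotions, and amounts are invented for the example, and the revenue and cost rows are assumed to share the same conformed dimension values.

```python
from collections import defaultdict

# Hypothetical granular rows from two first-level marts, keyed by the same
# conformed (customer, product, promotion) dimensions.
revenue = [("Acme", "Widget", "Spring Sale", 100.0),
           ("Acme", "Gadget", "None", 60.0),
           ("Bolt", "Widget", "Spring Sale", 40.0)]
cost = [("Acme", "Widget", "Spring Sale", 70.0),
        ("Acme", "Gadget", "None", 65.0),
        ("Bolt", "Widget", "Spring Sale", 25.0)]

# Second-level mart: profit at the shared grain.
profit = defaultdict(float)
for customer, product, promotion, amount in revenue:
    profit[(customer, product, promotion)] += amount
for customer, product, promotion, amount in cost:
    profit[(customer, product, promotion)] -= amount


def roll_up(position):
    """Sum profit along one dimension (0=customer, 1=product, 2=promotion)."""
    totals = defaultdict(float)
    for key, amount in profit.items():
        totals[key[position]] += amount
    return dict(totals)


by_customer = roll_up(0)
by_product = roll_up(1)
by_promotion = roll_up(2)
```

One granular profit table yields all three views; the hard part in practice is sourcing the cost rows, not the arithmetic.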

Rescuing Stovepipes

Can you rescue your stovepipes and convert them into supermarts? You can do this only if the dimensions used in the stovepipes can be mapped one-to-one or one-to-many with the proper conformed dimensions. If so, then the stovepipe dimension can be replaced with the conformed dimension. In some cases the conformed dimension can gracefully inherit some of the special attributes of the stovepipe dimension. But usually a stovepipe data mart has one or more dimensions that cannot easily be mapped into the conformed dimensions. The stovepipe sales geographies may be incompatible with the conformed sales geographies. Be careful about assuming that you can decompose something simple like stovepipe weeks back into conformed dimension days. While this sounds simple logically, you are probably making an unfounded assumption that you can spread a stovepipe week over weekdays and then turn around and add up again into months. As difficult as it is to admit, most of the time the stovepipe data marts must be shut down and rebuilt in the proper conformed dimensional framework.
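The week-to-day problem is easy to see with a week that straddles a month boundary. In this sketch the weekly total and the even-spread rule are assumptions; the point is that the monthly figures it produces are artifacts of that rule, not of the data.

```python
from datetime import date, timedelta

# Hypothetical stovepipe row: one weekly total, with no daily detail.
week_start = date(1998, 1, 26)  # a Monday; the week ends Sunday, 1 February
week_total = 700.0

# Assumption made here: sales were spread evenly over the seven days.
days = [week_start + timedelta(days=i) for i in range(7)]
even_share = week_total / 7

by_month = {}
for day in days:
    month = day.strftime("%Y-%m")
    by_month[month] = by_month.get(month, 0.0) + even_share
```

If most of the week's sales actually happened on the Sunday, the true February share would be far larger than the one-seventh this allocation assigns, and nothing in the stovepipe data can tell you which is right.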

When You Don't Need Conformed Dimensions

If your customers and products are disjoint and you don't manage your separate business lines together, then there is little point in building a data warehouse that tightly integrates these businesses. For example, if you are a conglomerate business whose subsidiaries span food businesses, hardware businesses, and services, it probably doesn't make sense to think of your customers as leveraging your brand name from product to product or service to service. Even if you tried to build a set of conformed Product and Customer dimensions in a single data warehouse spanning all the lines of business, most of the reports would end up weirdly "diagonalized" with the data from each business line in rows and columns not shared by the other lines. In this case your data warehouse would mimic your management structure, and you would build separate and self-contained data warehouses for each subsidiary.

Clear Vision

In this article, I have described a rational approach to planning an enterprise data warehouse. We have achieved the best of two worlds. We have created an architectural framework with supermarts that guides the overall design, but we have divided the problem into bite-sized chunks that can be implemented by real human beings. Along the way, we have adjusted the conventional definition of the data mart, calling it a supermart. We see now that a supermart is a complete subset of the overall data warehouse, physically distributed across multiple hardware and software platforms, and always based on the most granular data we can possibly extract from our legacy systems. Every supermart is a family of similar tables sharing conformed dimensions. These conformed dimensions have a uniform interpretation across the enterprise. Finally, we see the overall data warehouse for what it is: a collection of separately implemented supermarts bound together with a powerful architecture based on conformed dimensions and standardized facts.

Figure 1. An example of two data marts with conformed dimensions (Time, Customer, Product, and Promotion). These two data marts can work together to produce a combined report with Product and Promotion as row headers.

Ralph Kimball was co-inventor of the Xerox Star workstation, the first commercial product to use mice, icons, and windows. He was vice president of applications at Metaphor Computer Systems and is the founder and former CEO of Red Brick Systems. He now works as an independent consultant designing large data warehouses. He is the author of The Data Warehouse Toolkit: How to Design Dimensional Data Warehouses (Wiley, 1996). You can reach Ralph through his Web page at http://www.rkimball.com/.


DBMS and Internet Systems (http://www.dbmsmag.com/)

Copyright © 1998 Miller Freeman, Inc. ALL RIGHTS RESERVED

Redistribution without permission is prohibited.
